Alibaba has unveiled Qwen2.5-Max, a large-scale Mixture-of-Experts (MoE) model pretrained on over 20 trillion tokens and then refined with Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). The company reports that Qwen2.5-Max outperforms several leading models on a range of widely used benchmarks.

Performance Evaluation

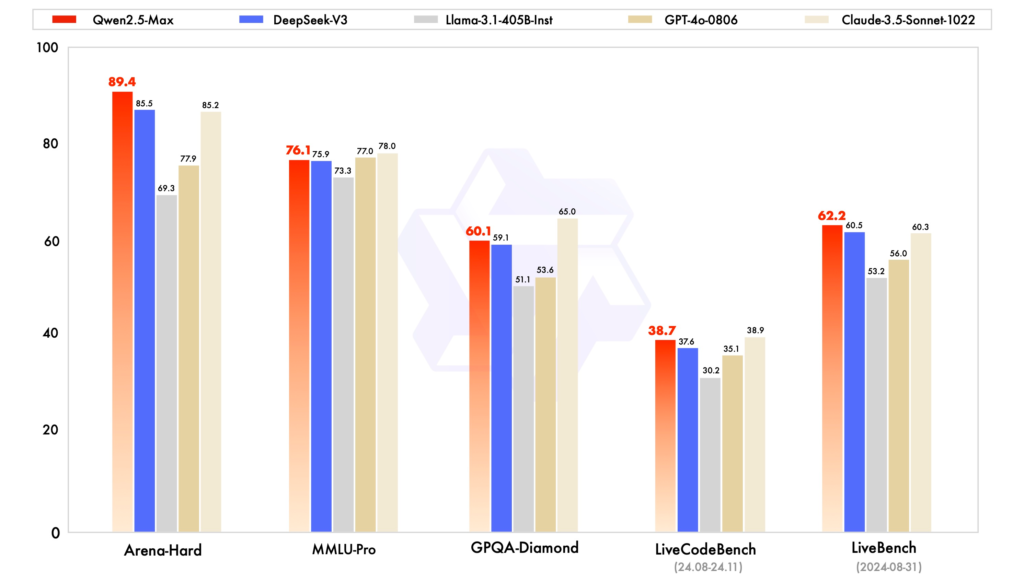

Qwen2.5-Max has been evaluated against leading models on several benchmarks of interest to the AI community. These include MMLU-Pro, which assesses knowledge through college-level problems; LiveCodeBench, which evaluates coding capabilities; LiveBench, which tests general capabilities comprehensively; Arena-Hard, which approximates human preferences; and GPQA-Diamond, which poses graduate-level science questions. According to Alibaba, Qwen2.5-Max outperforms DeepSeek V3 on Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond, and posts competitive results on MMLU-Pro.

Comparison with Base Models

For the base-model comparison, Qwen2.5-Max was evaluated against DeepSeek V3, a leading open-weight MoE model; Llama-3.1-405B, the largest open-weight dense model; and Qwen2.5-72B, among the top open-weight dense models. The Qwen2.5-Max base model shows significant advantages across most benchmarks, and Alibaba expects that advances in post-training techniques will further improve performance in future iterations.

| Feature | Qwen2.5-Max | GPT-4o (OpenAI) | DeepSeek-V3 | Claude 3.5 Sonnet (Anthropic) |
| --- | --- | --- | --- | --- |
| Architecture | MoE (size undisclosed) | Undisclosed | MoE | Undisclosed |
| Training Tokens | 20T | Undisclosed | 14.8T | Undisclosed |
| Context Window | 128K tokens | 128K tokens | 128K tokens | 200K tokens |
| Coding (HumanEval) | 92.7% | 90.1% | 88.9% | 85.6% |
| Cost per Million Tokens | $0.38 | $5.00 | $0.25 | $3.00 |
| Open Weights? | No | No | Yes | No |

Accessing Qwen2.5-Max

Qwen2.5-Max is available in Qwen Chat, where users can talk to the model directly, work with artifacts, and use search. The model is also offered as an API through Alibaba Cloud: register for an Alibaba Cloud account, activate the Model Studio service, and create an API key. The API is compatible with OpenAI's API, so existing OpenAI-style client code can be pointed at it with minimal changes.
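Because the API follows the OpenAI chat-completions format, a request can be sketched with nothing but the Python standard library. The example below is a minimal illustration, not an official client. The base URL, the model name `qwen-max-2025-01-25`, and the environment variable `DASHSCOPE_API_KEY` are assumptions that do not come from this article, so confirm them in the Model Studio console before use.

```python
import json
import os
import urllib.request

# Assumptions (not stated in this article): the endpoint and model name below
# are believed to be Model Studio's OpenAI-compatible base URL and a
# Qwen2.5-Max snapshot name. Verify both in the Model Studio console.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt over the network and return the assistant's reply."""
    # Hypothetical variable name; use whatever your deployment stores the key in.
    api_key = os.environ["DASHSCOPE_API_KEY"]
    with urllib.request.urlopen(build_chat_request(api_key, prompt)) as resp:
        body = json.load(resp)
    # OpenAI-style responses carry the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a valid key exported in the environment, `print(ask("Which number is larger, 9.11 or 9.8?"))` would send one chat turn and print the reply. Code that already uses an OpenAI SDK should only need its base URL, API key, and model name changed, under the same assumptions.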

Future Directions

Alibaba frames the scaling of data and model size as both a route to more capable models and a sign of its commitment to pioneering research. The company says it will focus next on improving the reasoning capabilities of large language models through scaled reinforcement learning. In its words, the aim is for models to eventually transcend human intelligence and explore uncharted territories of knowledge and understanding.

Contextualizing Qwen2.5-Max in the AI Landscape

The release of Qwen2.5-Max comes amid significant developments in the AI industry. Notably, DeepSeek, a Chinese AI startup, has garnered attention with its open-source R1 model, which competes with leading AI models while utilizing fewer resources. This development has disrupted the market, challenging established tech giants and highlighting the rapid advancements in AI capabilities.

In response to these advancements, industry leaders are reevaluating their strategies. For instance, OpenAI CEO Sam Altman has indicated plans to adopt new AI approaches inspired by DeepSeek and Meta, reflecting a shift towards more transparent and collaborative AI development.

Conclusion

Alibaba’s introduction of Qwen2.5-Max represents a significant step forward in AI development, showcasing the potential of large-scale MoE models. As the AI landscape continues to evolve with rapid advancements and increased competition, models like Qwen2.5-Max highlight the importance of innovation and collaboration in driving the field forward.