Safe Superintelligence Inc. (SSI), the secretive AI startup led by OpenAI co-founder Ilya Sutskever, has raised $2 billion in fresh capital at a $32 billion valuation, making it one of the most valuable AI ventures today. The round drew support from some of the tech industry’s most influential players, including Alphabet (Google’s parent company) and Nvidia.

A Vision Anchored in Safety Over Speed

Founded in June 2024, SSI has set itself apart in a crowded AI landscape with a singular focus on building “safe superintelligence”: AI systems more intelligent than humans, yet explicitly designed to remain beneficial, controllable, and aligned with human values. This mission is not merely philosophical; it is the foundation of SSI’s operational strategy.

The startup was co-founded by three seasoned technologists:

- Ilya Sutskever, former chief scientist at OpenAI, known for his work on generative AI systems including ChatGPT.

- Daniel Gross, an investor and entrepreneur who previously led AI efforts at Apple.

- Daniel Levy, a former researcher on OpenAI’s technical staff.

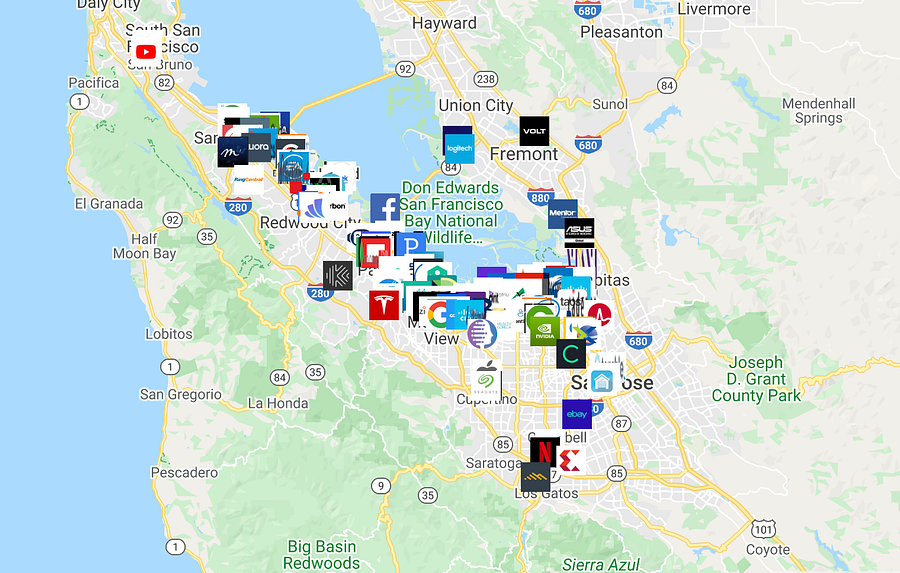

Operating out of Tel Aviv and Palo Alto, SSI is selectively hiring top engineers and researchers to build what it calls “a lean, cracked team” focused solely on what it believes is the greatest technical challenge of our time.

Strategic Backing from Tech Giants

The $2 billion round was led by Greenoaks Capital, with participation from:

- Alphabet, which is also providing SSI with access to its proprietary Tensor Processing Units (TPUs) through Google Cloud.

- Nvidia, the dominant supplier of AI chips, which continues to extend its investments across the generative AI ecosystem.

- Venture heavyweights such as Andreessen Horowitz, Lightspeed Venture Partners, DST Global, SV Angel, and NFDG, the fund run by Nat Friedman and SSI co-founder Daniel Gross.

The round follows a $1 billion raise in September 2024 that valued SSI at $5 billion. The jump from $5 billion to $32 billion in about seven months, a more than sixfold increase, underscores investors’ confidence in Sutskever’s vision and track record.

Google’s TPU Gambit: A Hardware Arms Race

One of the most significant developments in this round is SSI’s deepening collaboration with Google Cloud, which makes SSI an anchor customer for Google’s TPU hardware. These chips, long used mainly for Google’s own workloads, are now being offered strategically to high-potential AI labs, a move that signals Google’s growing ambition to compete directly with Nvidia in the AI compute market.

“With these foundational model builders, the gravity is increasing dramatically over to us,” said Darren Mowry, head of Google’s startup partnerships, in an interview with Reuters.

While Nvidia’s GPUs still dominate the market, SSI’s reliance on TPUs suggests a strategic bet on efficiency and specialization, especially in large-scale training of frontier models.

A Startling Absence: No Product Yet, Just a Promise

Despite its massive valuation, SSI has yet to release a product or even publicly demo any core technology. Its minimalist website serves more as a manifesto than a company portal, featuring only a mission statement:

“Building Safe Superintelligence is the most important technical problem of our time. We are focused on it—and nothing else.”

This has not dissuaded investors. On the contrary, the company’s stealth mode and singular focus are perceived as strengths in a field often distracted by short-term gains.

Fallout from OpenAI: A Departure with Consequences

Sutskever left OpenAI in May 2024, months after a November 2023 leadership crisis in which he, as a board member, voted to remove CEO Sam Altman; Altman was reinstated within days. The exit ended nearly a decade of collaboration that began in 2015, when both men were among the co-founders of OpenAI, launched with the ambition of ensuring that AGI benefits humanity.

With SSI, Sutskever appears determined to correct what he perceives as a growing misalignment in the AI arms race—placing safety, transparency, and governance at the heart of AGI development.

The Bigger Picture: AI Safety as a Market Driver

SSI’s trajectory reflects a broader shift in how the AI industry weighs existential risk and ethical design. Unlike more commercially driven ventures, SSI is choosing to front-load safety research, even at the cost of a slower time to market. This echoes the approach of other safety-focused labs such as Anthropic, which is backed by Google and Amazon.

Given the scale of the funding and the credibility of its backers, SSI now sits at the center of the global AI safety conversation. It is positioned not just as a technology company but as a bellwether for how superintelligence might be developed and governed.

Final Thoughts

With a $2 billion war chest, a top-tier talent pipeline, and strategic partnerships with cloud and hardware giants, Safe Superintelligence is quietly laying the groundwork for what could become one of the most consequential technological breakthroughs of the century. That ambition carries commensurate responsibility: the world will be watching not only what SSI builds, but how safely it builds it.

For continued coverage on AI safety breakthroughs and industry power plays, stay tuned to Superintelligence News.