The AI inference market, fueled by applications such as OpenAI’s ChatGPT, is growing rapidly and offers a lucrative opportunity for companies that can win a share of it. Nvidia currently dominates this market, but Cerebras Systems, a Silicon Valley AI startup, claims that its wafer-scale engine (WSE) technology can outpace traditional GPUs and deliver a major leap in AI inference performance.

Wafer-Scale Engine: A Game-Changer in AI Inference

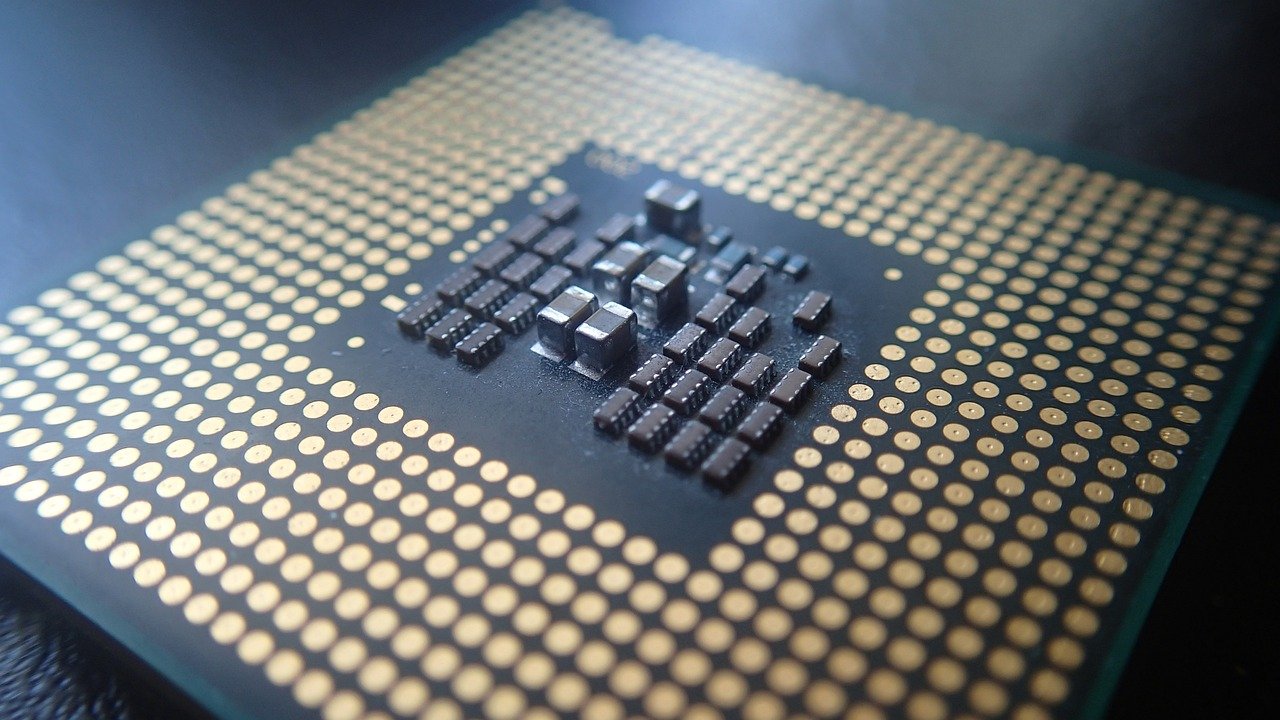

Cerebras Systems, known for its AI training computers, is now moving into inference services built on its wafer-scale technology. Conventional chips are cut from a silicon wafer as many small dies, but Cerebras’ WSE-3 uses the whole wafer: it is the size of a dinner plate and integrates 900,000 cores on a single piece of silicon. According to Cerebras, this design eliminates the external connections that multi-chip systems need between chips, which significantly reduces power consumption and cost while greatly boosting processing speed.

The core innovation lies in how the chip handles AI model weights. Because the weights are stored in memory on the wafer itself, next to the cores that use them, the WSE-3 avoids repeatedly fetching them over slower external interfaces, which makes AI inference faster and more efficient. Andy Hock, Cerebras’ Senior Vice President of Product and Strategy, explained, “We load the model weights onto the wafer, so they’re right next to the core, facilitating rapid data processing without slower external interfaces.”
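A back-of-the-envelope model helps show why weight placement matters. When a model generates text one token at a time for a single request, producing each new token requires reading essentially all of the model’s weights from memory, so memory bandwidth sets a ceiling on throughput. The sketch below computes that ceiling. The function name and both bandwidth figures are hypothetical, illustrative values, not Cerebras or Nvidia specifications, and the model ignores batching, KV-cache traffic, and compute limits.

```python
def decode_tokens_per_second_ceiling(num_params: float,
                                     bytes_per_param: float,
                                     memory_bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed, assuming every generated
    token must read all model weights from memory exactly once (batch size 1)."""
    bytes_per_token = num_params * bytes_per_param
    return memory_bandwidth_bytes_per_s / bytes_per_token


if __name__ == "__main__":
    params = 8e9      # an 8-billion-parameter model, as in the article
    bytes_fp16 = 2    # assumption: weights stored as 16-bit values
    # Hypothetical bandwidths chosen only to contrast off-chip vs on-chip memory.
    for label, bandwidth in [("off-chip memory, 3 TB/s (assumed)", 3e12),
                             ("on-chip memory, 300 TB/s (assumed)", 300e12)]:
        ceiling = decode_tokens_per_second_ceiling(params, bytes_fp16, bandwidth)
        print(f"{label}: <= {ceiling:,.0f} tokens/s")
```

With these assumed numbers the off-chip case tops out below 200 tokens per second, while the on-chip case leaves far more headroom. Real systems differ (batching, quantization, splitting a model across devices), so treat this as intuition rather than a performance prediction.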

Unmatched Speed and Efficiency Claims

Cerebras claims its WSE technology delivers AI inference 10 to 20 times faster than conventional cloud AI services running on Nvidia’s leading GPUs. As a demonstration, the company ran the 8-billion-parameter version of Meta’s open-source Llama 3.1 model at up to 1,800 tokens per second, far ahead of the 260 tokens per second it cited for top-of-the-line GPUs.
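The two throughput figures above can be turned into a speedup ratio and an average time per generated token. The sketch below uses only the numbers quoted in the article; the helper names are illustrative.

```python
# Throughput figures as quoted in the article (Llama 3.1, 8B parameters).
CEREBRAS_TOKENS_PER_S = 1_800
GPU_TOKENS_PER_S = 260


def speedup(fast_tps: float, slow_tps: float) -> float:
    """How many times faster the first system generates tokens."""
    return fast_tps / slow_tps


def ms_per_token(tokens_per_s: float) -> float:
    """Average time between generated tokens, in milliseconds."""
    return 1000.0 / tokens_per_s


if __name__ == "__main__":
    print(f"speedup: {speedup(CEREBRAS_TOKENS_PER_S, GPU_TOKENS_PER_S):.1f}x")
    print(f"Cerebras: {ms_per_token(CEREBRAS_TOKENS_PER_S):.2f} ms/token")
    print(f"GPU:      {ms_per_token(GPU_TOKENS_PER_S):.2f} ms/token")
```

On these two figures alone the gap works out to about 6.9x (roughly 0.56 ms versus 3.85 ms per token). The 10-to-20x range is Cerebras’ broader comparison against GPU-based cloud services, so the two numbers appear to describe different comparisons.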

Andrew Feldman, co-founder and CEO of Cerebras, emphasized that faster inference does more than speed up existing tasks: it opens the door to complex, multi-query, and real-time applications. For instance, models could generate more accurate and contextually relevant responses in natural language processing tasks, power more responsive real-time voice recognition systems, and support healthcare diagnostics by processing large datasets quickly.

Overcoming Industry Giants

Cerebras’ entry into the AI inference space comes with significant challenges. Nvidia’s dominance is underpinned by its CUDA platform, which has built a robust software ecosystem around its GPUs. Cerebras’ WSE, by contrast, has a distinct architecture, so software must be adapted to run on it. To bridge this gap, Cerebras supports popular AI frameworks such as PyTorch and provides its own software development kit, easing the transition for developers.

Cerebras plans to offer its inference service through an API on its cloud infrastructure, allowing companies to use the technology without major overhauls of their existing setups. Future plans include support for larger models, such as the 405-billion-parameter version of Llama 3.1, as well as models from Mistral and Cohere.

Pushing the Boundaries of AI Inference

Cerebras isn’t competing on speed alone; it’s aiming to expand what AI inference can do. Feldman highlighted the importance of “chain-of-thought prompting,” a method in which AI models reach more accurate answers by working through a problem step by step. Because the model writes out those intermediate steps as additional tokens, a chain-of-thought response takes longer to generate; Cerebras argues its speed keeps that extra work fast enough to be practical, enabling more detailed, rigorous responses that improve the quality of AI outputs.
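To make the latency argument concrete, the sketch below estimates how long it takes to stream a complete chain-of-thought response at the two throughput figures quoted earlier in the article. The response shape (2,000 reasoning tokens plus a 200-token answer) is a hypothetical example, and the estimate ignores prompt processing and network overhead.

```python
def response_seconds(reasoning_tokens: int, answer_tokens: int,
                     tokens_per_s: float) -> float:
    """Time to stream a full response in which the model first writes out its
    step-by-step reasoning and then the final answer."""
    return (reasoning_tokens + answer_tokens) / tokens_per_s


if __name__ == "__main__":
    # Hypothetical response shape, chosen for illustration only.
    reasoning, answer = 2_000, 200
    for label, tps in [("1,800 tokens/s", 1_800), ("260 tokens/s", 260)]:
        print(f"{label}: {response_seconds(reasoning, answer, tps):.1f} s")
```

Under these assumptions the same response takes roughly 1.2 seconds at 1,800 tokens per second versus about 8.5 seconds at 260 tokens per second, which illustrates why generation speed matters for step-by-step prompting.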

Feldman also pointed out that faster processing makes larger context windows practical, meaning AI models can analyze and compare longer documents, or several documents at once. This capability could lead to more sophisticated agentic AI systems that interact with multiple data sources and applications to deliver more accurate results.

Looking Ahead: Real-World Validation and Market Adoption

Despite the promising technology, analysts caution that Cerebras’ claims must be validated through real-world applications and independent benchmarks. The market remains highly competitive, and Nvidia’s newer Blackwell chips could pose a formidable challenge in the near future.

Cerebras’ shift from selling hardware to offering inference as a service reflects a broader trend in AI in which speed and efficiency are key differentiators. If Cerebras can deliver on its promises, it could reshape the AI inference landscape, offering companies a faster and more cost-effective alternative to existing GPU-based solutions.

As the AI arms race heats up, Cerebras’ innovative technology represents a significant step forward, but its ultimate success will hinge on adoption, real-world performance, and continued advancements in AI hardware.

Cerebras’ bet on wafer-scale technology challenges established norms in AI inference. With the inference market poised for explosive growth, the competition between Cerebras and Nvidia will be one to watch closely.