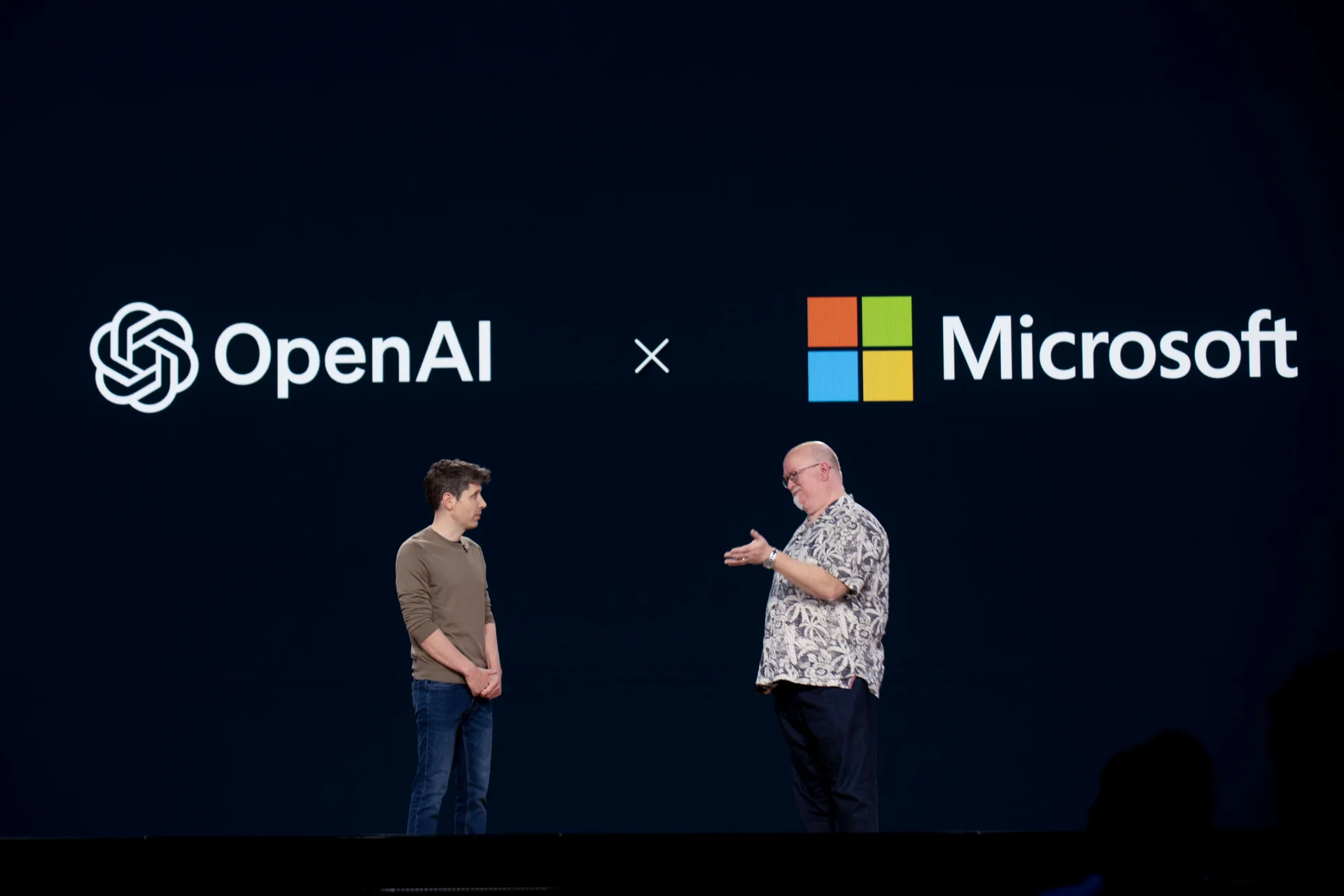

OpenAI’s unusual nonprofit–for-profit architecture is about to be rewritten—and Microsoft is both enabler and hedger. In the same breath that the partners announced a non-binding memorandum of understanding (MOU) to “formalize the next phase” of their relationship, OpenAI said its nonprofit parent would hold more than $100 billion in equity in the for-profit vehicle it plans to convert into a Public Benefit Corporation (PBC). Meanwhile, Microsoft is doubling down on training its own frontier models on ever-larger GPU clusters—insurance if the partnership’s center of gravity shifts again.

What changed—exactly?

- A non-binding MOU, binding implications: Microsoft and OpenAI jointly say they’ve signed an MOU and are “actively working to finalize contractual terms.” The announcement is deliberately sparse on commercial specifics, but it confirms the new phase is real.

- OpenAI’s nonprofit to hold a $100B+ equity stake: The nonprofit will control the PBC and receive a massive equity stake—framed as creating one of the most well-resourced philanthropic organizations in the world. That structure is meant to couple mission control with direct financial participation.

- Regulatory gatekeepers: The recapitalization and control changes require approvals—California and Delaware attorneys general are already engaged. The timing matters: OpenAI has signaled it wants to complete the conversion this year.

Why the MOU now?

- Clearer capital path: OpenAI is targeting a $500B valuation under the new for-profit structure. A cleaner, PBC-based governance model could ease future fundraising—and even tee up an eventual IPO.

- Rebalancing exclusivities: Under prior terms, Microsoft had preferred access and Azure exclusivity for OpenAI APIs. Those core elements continue through 2030, but the new arrangement introduces more flexibility: OpenAI can explore additional cloud partners, including Oracle and even Google, primarily for research and training.

- Safety front-and-center: Both companies tied the new phase explicitly to safety; the PBC charter will enshrine that safety decisions are guided by the nonprofit mission to ensure AGI benefits everyone.

Microsoft’s hedge: build world-class models in-house

- From MAI-1-preview to much bigger training runs: Microsoft confirmed its first homegrown models—MAI-1-preview (text) and MAI-Voice-1 (speech). MAI-1-preview was trained on roughly 15,000 NVIDIA H100 GPUs—small compared to rivals—but leadership says the company is already planning clusters 6–10× larger to pursue frontier-class capability.

- Pragmatic multi-model strategy: Microsoft plans to “support multiple models” in products like Copilot, using the best model for the job (including third-party where it wins) even as it becomes more self-reliant.

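- Massive capex & supply implications: Scaling from 15k H100s to 6–10× that size implies clusters of roughly 90,000 to 150,000 GPUs (counted in H100-equivalent terms), or about 75,000 to 135,000 more accelerators than the MAI-1-preview run, along with new datacenter footprints, power, and cooling. That is consistent with its pledge to make “significant investments” in its AI clusters.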
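The scaling claim above is simple arithmetic, and a short sketch makes the orders of magnitude concrete. The 15,000-GPU baseline and the 6–10× multiplier come from the article. The 700 W per-GPU figure is an assumption (the published maximum TDP of an H100 SXM part), and the power estimate covers GPUs only, ignoring CPUs, networking, and cooling overhead. Function names are illustrative, and future clusters may well use newer accelerators with different power draw.

```python
# Back-of-envelope sizing for a cluster 6-10x larger than the MAI-1-preview run.
# BASELINE_GPUS and SCALE_RANGE come from the article; WATTS_PER_GPU is an
# assumption (H100 SXM maximum TDP) used only for a rough GPU-only power figure.

BASELINE_GPUS = 15_000
SCALE_RANGE = (6, 10)
WATTS_PER_GPU = 700  # assumption: H100 SXM max TDP, excludes host and cooling


def projected_cluster_sizes(baseline, scale_range):
    """Return (low, high) total GPU counts for the given multiplier range."""
    low_mult, high_mult = scale_range
    return baseline * low_mult, baseline * high_mult


def additional_gpus(baseline, scale_range):
    """Return (low, high) GPUs needed beyond the baseline cluster."""
    low, high = projected_cluster_sizes(baseline, scale_range)
    return low - baseline, high - baseline


def gpu_power_megawatts(gpu_count, watts_per_gpu=WATTS_PER_GPU):
    """Rough GPU-only power draw in megawatts (no cooling or host overhead)."""
    return gpu_count * watts_per_gpu / 1_000_000


if __name__ == "__main__":
    low, high = projected_cluster_sizes(BASELINE_GPUS, SCALE_RANGE)
    extra_low, extra_high = additional_gpus(BASELINE_GPUS, SCALE_RANGE)
    print(f"Total GPUs: {low:,} to {high:,}")
    print(f"Additional GPUs: {extra_low:,} to {extra_high:,}")
    print(f"GPU-only power: {gpu_power_megawatts(low):.0f} to "
          f"{gpu_power_megawatts(high):.0f} MW")
```

Under these assumptions the GPUs alone would draw on the order of 63 to 105 MW, before any facility overhead, which is why power and siting matter as much as chip supply.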

How the new OpenAI structure is supposed to work

- Control + upside: The nonprofit board remains the ultimate authority (e.g., over AGI determinations) while holding a huge equity stake in the PBC. As the PBC scales, nonprofit resources scale too—funding grants and safety initiatives.

- Approvals and litigation: Beyond AG approvals, OpenAI still faces scrutiny from nonprofit watchdogs and lawsuits. Expect legal and policy challenges around whether charitable assets and mission are adequately protected in the new regime.

What it means for customers, developers, and rivals

- Azure remains central, but not alone: Microsoft still touts Azure exclusivity for the OpenAI API through 2030 and rights to use OpenAI IP inside products, while the MOU era normalizes multi-cloud compute for OpenAI research/training. For customers, that could mean more capacity and more regions, and possibly fewer outages during peak demand, though neither company has promised any of this.

- A broader model menu inside Microsoft 365 and GitHub: Microsoft’s plan to mix models (OpenAI, Anthropic, Microsoft MAI) inside Copilot could yield faster feature iteration and better task-fit—while raising interesting benchmarking and transparency questions.

- Competitive chessboard: As OpenAI diversifies cloud partners and Microsoft accelerates its own frontier efforts, expect a more arm’s-length co-opetition. The MOU may ultimately reduce the chance of a messy breakup by giving each side what it needs: capital & optionality for OpenAI; access & insurance for Microsoft.

Key risks and open questions

- It’s still an MOU: Non-binding means details could change. Watch for a definitive agreement spelling out IP access, exclusivity (if any), revenue-sharing, and AGI-trigger clauses.

- Regulatory timing: California/Delaware AG approvals could alter timelines or impose conditions on governance, safety controls, or charitable-asset protections.

- Governance reality vs. theory: Will a nonprofit with a >$100B stake truly constrain commercial incentives when safety calls are costly? Execution will be everything.

- Compute & energy constraints: Building clusters 6–10× larger than 15k H100s is not just procurement; it’s power, cooling, and data center geography at industrial scale. Delays here blunt Microsoft’s hedge.

The bottom line

This is a high-wire act: OpenAI gets a route to raise capital and codify mission control via a PBC while Microsoft preserves access and hedges with its own frontier models. If regulators sign off and the definitive agreement maintains safety and openness commitments, the industry could see more capacity, a broader model mix, and sharper competition at the very top of the model stack.