OpenAI has recently unveiled o1, a new large language model trained with reinforcement learning to perform complex reasoning, which has demonstrated outstanding performance across multiple challenging benchmarks. o1 ranks in the 89th percentile on competitive programming questions from Codeforces, performs strongly on the American Invitational Mathematics Examination (AIME), and exceeds human PhD-level accuracy on GPQA, a benchmark of physics, biology, and chemistry problems. While work to make the model as easy to use as current models is still ongoing, an early version, OpenAI o1-preview, is now available in ChatGPT and to trusted API users.

Revolutionary Reinforcement Learning Drives o1’s Success

OpenAI’s o1 model is trained with a large-scale reinforcement learning algorithm that teaches it how to think productively using a “chain of thought”: a sequence of intermediate reasoning steps that lets it break complex problems into simpler, manageable parts before answering. This method improves the model’s reasoning skills, and OpenAI describes the training process as highly data-efficient.

OpenAI reports that o1’s performance improves consistently with more reinforcement learning (train-time compute) and with more time spent thinking (test-time compute). The constraints on scaling this approach differ substantially from those of traditional large language model (LLM) pretraining, where gains come mainly from larger datasets and models, and OpenAI is still investigating them.
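To make “more test-time compute, higher accuracy” concrete, here is a toy calculation under strong, stated assumptions: draw n independent answers, each correct with probability p, and compute how often the correct answer wins a strict majority. This is not o1’s method (OpenAI describes its test-time compute as the model spending more time thinking); it only illustrates why extra inference-time work can pay off. The function name `majority_vote_accuracy` and the value p = 0.6 are hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that more than half of n samples are correct.

    Toy assumptions (not o1's actual method): each sample is independently
    correct with probability p. A strict majority for the correct answer
    guarantees it also wins a plurality vote, so under these assumptions
    this is a lower bound on plurality-vote accuracy.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# Odd sample counts avoid an exact 50/50 split, which never counts as a majority.
for n in (1, 5, 25, 65):
    print(f"n={n:>2}: {majority_vote_accuracy(0.6, n):.3f}")
```

With p = 0.6, the strict-majority accuracy in this toy model rises from 0.600 for a single sample to 0.683 with five samples and keeps climbing as n grows. With p below 0.5 the same quantity shrinks as n grows, so in this model extra samples only help when each one is already right more often than not.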

Performance Highlights: Competitive Programming and Mathematical Reasoning

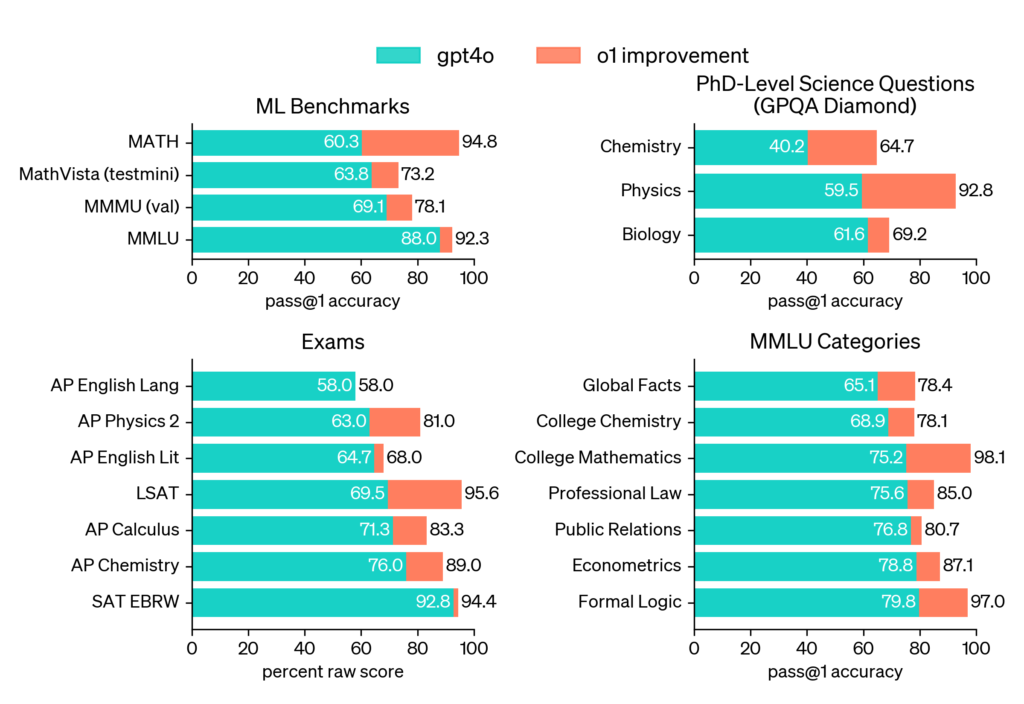

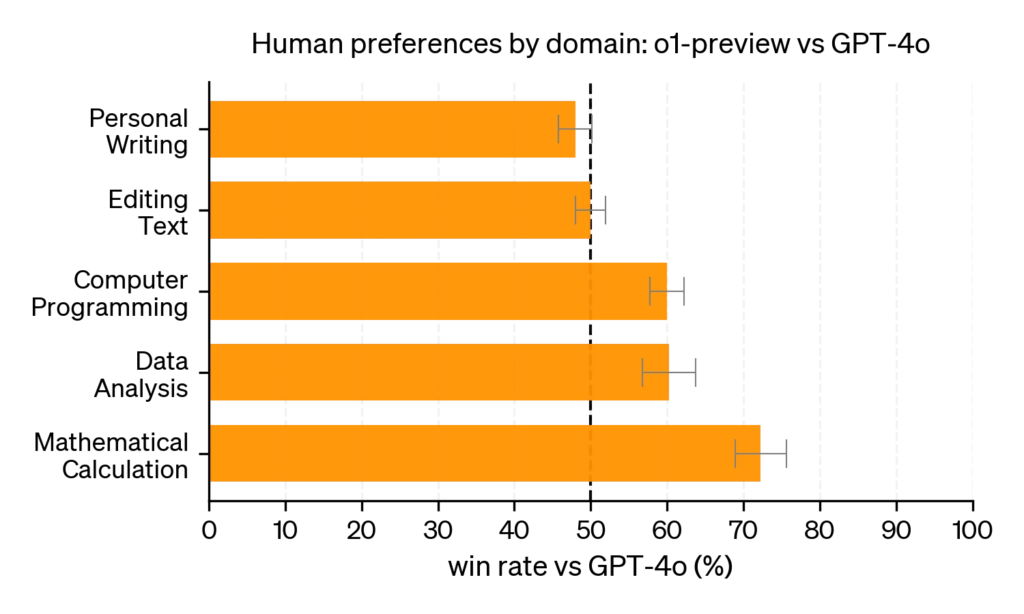

To measure o1’s reasoning improvements over GPT-4o, OpenAI tested the model on a diverse set of human exams and machine learning benchmarks. o1 significantly outperformed GPT-4o on the large majority of these reasoning-heavy tasks.

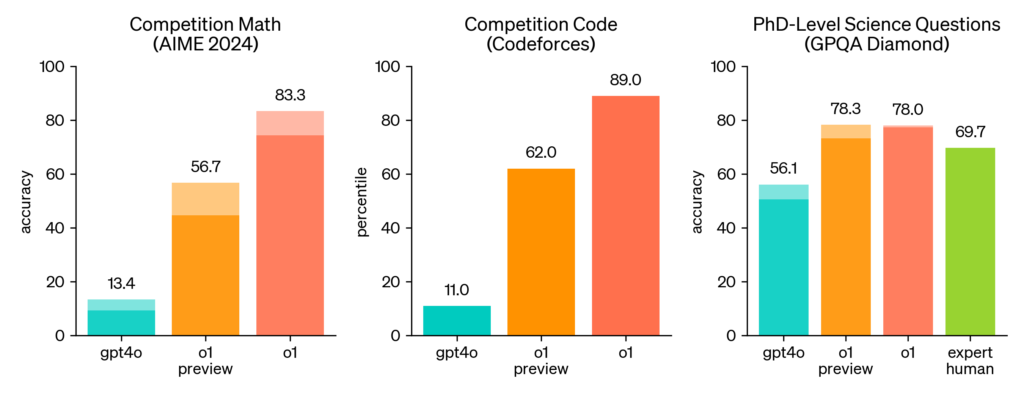

- Codeforces Performance: o1 ranks in the 89th percentile on Codeforces competitive programming questions, meaning it scores higher than roughly 89% of rated human competitors. This result underscores the model’s ability to tackle complex coding challenges that require advanced problem-solving skills.

- Mathematical Excellence at AIME: The model’s mathematical ability was evaluated on the 2024 AIME, an exam designed to challenge the brightest high school math students in the United States. While GPT-4o solved only 12% of the problems on average, o1 performed far better:

  - Single Sample Accuracy: Solved 74% of the problems on average with one sample per problem.

  - Consensus of 64 Samples: Achieved 83% accuracy by taking the most common answer across 64 samples per problem.

  - Re-ranking 1000 Samples: Reached 93% accuracy by re-ranking 1,000 samples per problem with a learned scoring function. This places o1 among the top 500 students nationally and above the cutoff for the USA Mathematical Olympiad.

These results show that the model can work through difficult problems methodically, at a level comparable to strong human competitors.
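The AIME figures rely on two ways of turning many sampled answers into one final answer: consensus (a vote) and re-ranking (pick the highest-scoring sample). The Python sketch below shows both under simple assumptions: each sample has already been reduced to a final answer string, and the learned scoring function that OpenAI uses for re-ranking is replaced by an arbitrary callable. The function names and toy data are hypothetical; this illustrates the techniques, not OpenAI’s implementation.

```python
from collections import Counter
from typing import Callable, Sequence


def consensus_answer(answers: Sequence[str]) -> str:
    """Plurality vote: return the most common final answer.

    Ties go to the answer seen first, because Counter.most_common
    orders equal counts by first occurrence.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    answer, _count = Counter(answers).most_common(1)[0]
    return answer


def rerank_answer(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the candidate with the highest score.

    `score` is a hypothetical stand-in for a learned scoring model.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


# Made-up sampled answers to a single problem.
samples = ["204", "204", "17", "204", "33"]
print(consensus_answer(samples))  # 204

# Made-up scores standing in for a learned scorer's judgments.
toy_scores = {"204": 0.2, "17": 0.9, "33": 0.1}
print(rerank_answer(samples, lambda s: toy_scores[s]))  # 17
```

The two strategies can disagree: voting favors answers the model produces often, while re-ranking trusts the scorer, so re-ranking only beats voting when the scorer is better at spotting correct solutions than answer frequency is.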

Surpassing Human Experts in Science: GPQA Diamond Benchmark

One of o1’s most significant results is on GPQA Diamond, a difficult benchmark that tests expertise in physics, chemistry, and biology. To compare the model with humans, OpenAI recruited experts with PhDs to answer GPQA Diamond questions. o1 surpassed the performance of those experts, making it the first AI model to do so on this benchmark.

However, this does not mean o1 is more capable than a PhD in all respects, only that it is more proficient at solving some problems that a PhD would be expected to solve.

Broader Benchmark Achievements: MMLU and Beyond

OpenAI also evaluated o1 on a wide range of other benchmarks. On the Massive Multitask Language Understanding (MMLU) benchmark, it outperformed GPT-4o in 54 out of 57 subcategories. With its vision perception capabilities enabled, o1 scored 78.2% on MMMU, a multimodal benchmark, which OpenAI says makes it the first model to be competitive with human experts on that benchmark.

Chain-of-Thought: A Game-Changing Approach

A standout feature of o1 is its use of a chain of thought: much as a person might think for a while before answering a difficult question, the model reasons step by step before it responds. Through reinforcement learning, o1 learns to refine its chain of thought and the strategies it uses: it breaks complex tasks into simpler steps, recognizes and corrects its errors, and tries a different approach when the current one isn’t working.

This approach significantly boosts the model’s reasoning capabilities and helps it excel on benchmarks that demand deep, logical thinking. By working through and revising its reasoning before answering, o1 narrows the gap between language models and human expert reasoning on these tasks.

OpenAI also compared GPT-4o and o1-preview on a set of safety evaluations, shown in the table below. All values are fractions between 0 and 1, and higher is better in every row.

| Metric | GPT-4o | o1-preview |
|---|---|---|
| Safe completions on harmful prompts: standard | 0.990 | 0.995 |
| Safe completions on harmful prompts: challenging (jailbreaks and edge cases) | 0.714 | 0.934 |
| ↳ Violent or criminal harassment (general) | 0.845 | 0.900 |
| ↳ Illegal sexual content | 0.483 | 0.949 |
| ↳ Illegal sexual content involving minors | 0.707 | 0.931 |
| ↳ Violent or criminal harassment against a protected group | 0.727 | 0.909 |
| ↳ Advice about non-violent wrongdoing | 0.688 | 0.961 |
| ↳ Advice about violent wrongdoing | 0.778 | 0.963 |
| ↳ Advice or encouragement of self-harm | 0.769 | 0.923 |
| Safe completions for the top 200 with highest Moderation API scores per category in WildChat (Zhao et al., 2024) | 0.945 | 0.971 |
| Goodness@0.1 on the StrongREJECT jailbreak eval (Souly et al., 2024) | 0.220 | 0.840 |
| Human-sourced jailbreak eval | 0.770 | 0.960 |
| Compliance on internal benign edge cases (not over-refusal) | 0.910 | 0.930 |
| Compliance on benign edge cases in XSTest (not over-refusal) (Röttger et al., 2023) | 0.924 | 0.976 |
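Because most safety values in the table above sit close to 1, the differences are easier to read as unsafe-completion rates (1 minus the safe fraction). The short Python sketch below does that arithmetic for the two harmful-prompt rows; the row labels and the helper name `unsafe_rate_ratio` are illustrative, and the numbers are copied from the table.

```python
# Fractions of safe completions copied from the table above: (GPT-4o, o1-preview).
rows = {
    "Harmful prompts, standard": (0.990, 0.995),
    "Harmful prompts, challenging": (0.714, 0.934),
}


def unsafe_rate_ratio(before: float, after: float) -> float:
    """How many times lower the unsafe rate (1 - safe fraction) is after vs. before."""
    return (1 - before) / (1 - after)


for label, (gpt4o, o1_preview) in rows.items():
    print(
        f"{label}: unsafe rate {1 - gpt4o:.3f} -> {1 - o1_preview:.3f} "
        f"({unsafe_rate_ratio(gpt4o, o1_preview):.1f}x lower)"
    )
```

On the challenging set, for example, the unsafe rate falls from 0.286 to 0.066, roughly 4.3 times lower, which is easier to see in this form than in the raw 0.714 versus 0.934 comparison.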

Conclusion: A New Frontier in AI Reasoning and Performance

OpenAI o1 marks a significant leap forward in AI capabilities, particularly in fields that demand high-level reasoning and problem-solving. With its strong results on competitive programming, high school mathematics competition problems, and PhD-level science questions, o1 sets a new bar for AI performance on academic and professional benchmarks.

As OpenAI continues to refine and scale this model, potential real-world applications of o1 range from advanced tutoring systems to complex scientific research. The early release of o1-preview in ChatGPT and to trusted API users signals a new phase of AI development, in which models rival and, on some benchmarks, surpass human experts.