LinkedIn, the professional networking platform owned by Microsoft, recently revealed that it is using user data to train artificial intelligence (AI) models. Because the company did not clearly communicate the change or ask for consent, many users may not realize that their personal data, including profile information, posts, and other LinkedIn content, is being used to enhance AI-driven features on the platform. Here’s a closer look at what’s happening, how it affects you, and what you can do to protect your privacy.

LinkedIn’s Quiet Data Collection for AI Training

About a week ago, LinkedIn quietly updated its policies, revealing that it uses user data to train AI models. This data includes information from user profiles, posts, and other activity on the platform. According to LinkedIn, the models power AI-driven features across LinkedIn and its “affiliates.” The policy text itself does not say which affiliates are involved, but LinkedIn is owned by Microsoft, a company deeply embedded in the AI ecosystem, with strong financial ties to OpenAI.

When approached for comment, LinkedIn clarified that “affiliates” refers to any Microsoft-owned company, a group that includes over 270 acquisitions since 1986. The company emphasized, however, that although LinkedIn uses OpenAI models through Microsoft’s Azure AI service, it does not share user data directly with OpenAI.

Policy Changes and Lack of Transparency

Before the update, LinkedIn’s legal documents, including its Pages Terms, User Agreement, Privacy Policy, and Copyright Policy, did not specifically mention AI or artificial intelligence. Instead, they broadly stated that LinkedIn can “access, store, process and use any information and personal data that you provide.” On September 19, 2024, LinkedIn announced updates to its User Agreement and Privacy Policy that explicitly state that it uses user data to train AI models.

Blake Lawit, LinkedIn’s Senior Vice President and General Counsel, explained in a recent post: “We have added language to clarify how we use the information you share with us to develop the products and services of LinkedIn and its affiliates, including by training AI models used for content generation.” This marks a clear shift towards openly acknowledging the use of personal data in AI training, a move that aligns LinkedIn with other tech giants like Meta, which recently admitted to similar data usage.

Special Protections for EU Users

One detail that has sparked concern is the disparity in protections based on geographical location. LinkedIn automatically excludes users in the European Union (EU), European Economic Area (EEA), and Switzerland from AI data collection because of the stringent data privacy regulations in these regions. For users in these areas, LinkedIn will not collect data for AI training “until further notice.” Users outside these jurisdictions, such as those in the UK and the US, are automatically included unless they actively opt out.

How to Opt Out of LinkedIn’s AI Data Training

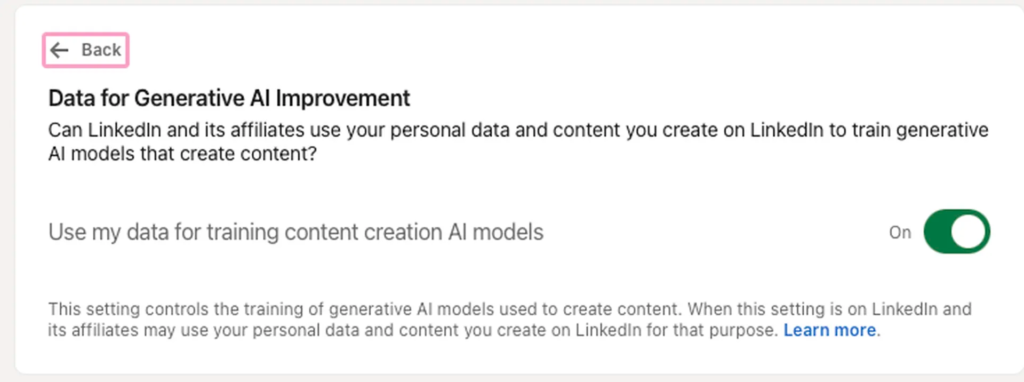

LinkedIn’s new data policy allows users to opt out of having their data used to train generative AI models. However, the opt-out process is not straightforward: users must find the “Data for Generative AI Improvement” setting under the privacy tab of their account settings. The setting, which recently appeared for users in the UK and US, lets them disable the use of their data for generative AI training.

To opt out:

- Go to your LinkedIn account settings.

- Navigate to the “Data privacy” tab.

- Find “Data for Generative AI Improvement” and toggle it to “off.”

Note that opting out only stops future data usage. LinkedIn states, “Opting out means that LinkedIn and its affiliates won’t use your personal data or content on LinkedIn to train models going forward, but does not affect training that has already taken place.”

LinkedIn also uses other machine learning tools, for purposes such as content personalization and moderation, that do not generate content but still use user data. To keep your data out of these applications, you must also fill out the LinkedIn Data Processing Objection Form, a separate step that many users may overlook.

Privacy Concerns and Industry Implications

LinkedIn’s decision to quietly opt users in by default, together with the difficulty of opting out, has raised significant privacy concerns among users. Many argue that such practices should require explicit user consent, especially given the potentially sensitive nature of professional data. Critics have called for more transparency from LinkedIn and its parent company Microsoft, and have urged them to compensate users whose data is used for AI training.

The issue also underscores a broader trend among tech companies that use personal data for AI development without adequate disclosure or consent mechanisms. Meta recently faced backlash for a similar practice, admitting that it had scraped non-private user data for model training dating back to 2007. As AI continues to evolve, the debate around data privacy, user rights, and ethical AI development is likely to intensify.

What’s Next for LinkedIn Users?

For now, LinkedIn users need to take proactive steps to protect their data from being used in AI training. While the platform’s updated privacy settings and opt-out forms provide some control, the onus is on users to navigate LinkedIn’s complex privacy landscape. As the conversation around data ethics grows, it remains to be seen whether LinkedIn will further refine its policies or introduce more transparent and user-friendly privacy practices.

In the meantime, staying informed and actively managing your LinkedIn privacy settings are the best ways to keep your data from being used in ways you might not have agreed to.