A Bold Leap into Agentic AI

Google has officially unveiled Gemini 2.0, its next-generation AI model, signaling a shift towards AI systems capable of acting on a user's behalf. The release extends the capabilities of its predecessor and lays the foundation for a new era of “agentic” AI, in which systems are designed to think ahead, plan tasks, and take action under user supervision.

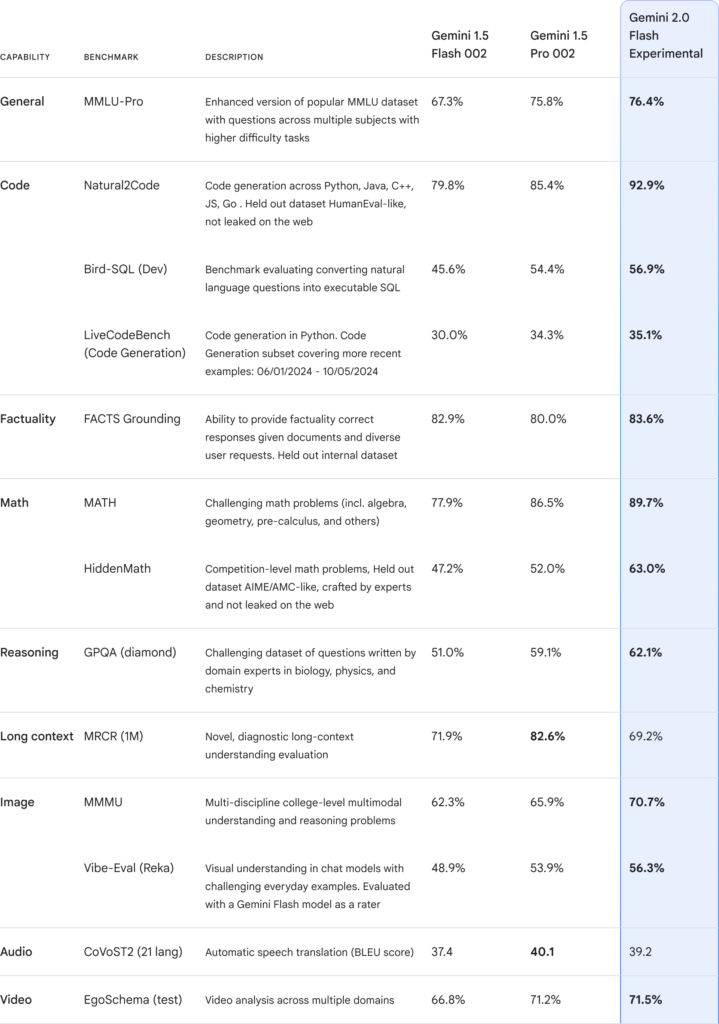

Arriving roughly one year after the debut of the Gemini family, the release leads with Gemini 2.0 Flash, an experimental, high-performance model optimized for speed and scalability. Its multimodal capabilities, such as native image and audio generation, are central to Google's push to define the future of personal computing, search, and beyond.

Enhanced Multimodal and Agentic Features

At the core of Gemini 2.0 lies a suite of advanced features that elevate its utility:

- Multimodal Inputs and Outputs: Gemini 2.0 accepts text, image, video, and audio inputs and can generate text, image, and audio outputs. This multimodal fluency broadens accessibility and the range of use cases the model can serve.

- Agentic Action: Unlike its predecessors, Gemini 2.0 introduces agentic abilities, enabling it to think multiple steps ahead and act on behalf of users. These actions include:

  - Using Google tools like Search, Maps, and Lens.

  - Performing complex coding and data analysis tasks through Project Jules, a developer-specific agent.

  - Navigating web environments autonomously via Project Mariner.

- Native Tool Integration: The model now natively integrates with third-party tools, enhancing productivity in tasks like coding, web navigation, and real-time communication.
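The idea of agentic action under supervision can be illustrated with a small sketch. This is not how Gemini 2.0 works internally; it is a toy loop, assuming a pre-made plan of steps, a few stand-in tools, and an approval callback that plays the role of the supervising user. All names here (`Step`, `run_supervised`, `approve_read_only`, the tool names) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool to call (hypothetical)
    argument: str  # input passed to that tool


def run_supervised(plan: List[Step],
                   tools: Dict[str, Callable[[str], str]],
                   approve: Callable[[Step], bool]) -> List[str]:
    """Run each planned step only if the tool exists and the supervisor approves."""
    log = []
    for step in plan:
        if step.tool not in tools:
            log.append(f"skipped {step.tool}: unknown tool")
            continue
        if not approve(step):
            log.append(f"skipped {step.tool}: not approved")
            continue
        log.append(f"{step.tool}: {tools[step.tool](step.argument)}")
    return log


# Stand-in tools; a real agent would call services such as Search or Maps.
TOOLS = {
    "search": lambda query: f"results for '{query}'",
    "maps": lambda place: f"route to '{place}'",
    "purchase": lambda item: f"bought '{item}'",
}

# A fixed multi-step plan; in practice the model would produce this.
PLAN = [
    Step("search", "coffee near me"),
    Step("maps", "Blue Cafe"),
    Step("purchase", "latte"),
]


def approve_read_only(step: Step) -> bool:
    """Supervisor policy: allow lookups, block anything with side effects."""
    return step.tool in {"search", "maps"}


if __name__ == "__main__":
    for line in run_supervised(PLAN, TOOLS, approve_read_only):
        print(line)
```

The approval callback is kept separate from the tools so that the supervision policy can change without touching the plan or the tool implementations.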

Transforming Products Across the Google Ecosystem

Google plans to integrate Gemini 2.0 across its products, including Search, Workspace, and the Gemini app. The model will power more nuanced AI Overviews in Search, enabling users to ask complex, multi-step questions. In 2025, Google expects to extend Gemini’s capabilities to form factors such as smart glasses, offering real-time insights through augmented reality interfaces.

Pioneering Prototypes

Google also introduced prototypes showcasing Gemini 2.0’s potential:

- Project Astra: A universal AI assistant that combines language understanding with contextual memory and real-world reasoning. Astra’s multilingual capabilities and integration with tools like Google Maps make it a standout innovation for daily use.

- Project Mariner: A Chrome extension designed to automate complex web tasks such as shopping or research, achieving an impressive 83.5% success rate on the WebVoyager benchmark.

- Jules for Developers: An agent embedded within GitHub workflows, capable of automating intricate coding tasks like debugging and repository management.

Infrastructure Powering Gemini 2.0

The model’s performance is backed by Trillium, Google’s sixth-generation Tensor Processing Unit (TPU). This hardware supports faster, more efficient training and inference, with more than 100,000 Trillium chips deployed across Google’s infrastructure.

Responsible Development

In an era where AI autonomy raises concerns, Google emphasized its commitment to safety. Measures include:

- Extensive testing with trusted users.

- Real-time safety evaluations and mitigation mechanisms against risks like misinformation and data breaches.

- Built-in privacy controls, allowing users to delete sensitive data from the system.

Through rigorous oversight, Google aims to deploy Gemini 2.0 responsibly while unlocking its full potential.

Gemini 2.0: The Beginning of a New Chapter

As Google propels AI into the “agentic era,” the implications for industries, developers, and consumers are profound. Gemini 2.0 not only enhances existing applications but also pioneers new paradigms of interaction with technology. From personal assistants to enterprise solutions, its release sets the stage for a transformative future.

For those eager to explore it, Gemini 2.0 Flash is available now as an experimental model through the Gemini API and Google AI Studio, with broader access expected in early 2025.
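For readers who want to try the API, the following is a minimal sketch of a text request to the Gemini API's REST `generateContent` endpoint, using only the Python standard library. The model ID `gemini-2.0-flash-exp` and the `GEMINI_API_KEY` environment variable name are assumptions; check Google's current model list and your own key setup before relying on them.

```python
import json
import os
import urllib.request

# Assumed model ID for the experimental release; verify against the model list.
MODEL = "gemini-2.0-flash-exp"
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and parse the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # assumed variable name
    if key:
        reply = send(build_request("Summarize agentic AI in one sentence.", key))
        # The generated text sits in the first part of the first candidate.
        print(reply["candidates"][0]["content"]["parts"][0]["text"])
    else:
        print("Set GEMINI_API_KEY to send the request.")
```

Building the request separately from sending it makes the payload easy to inspect without a network call or an API key.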