The big swing

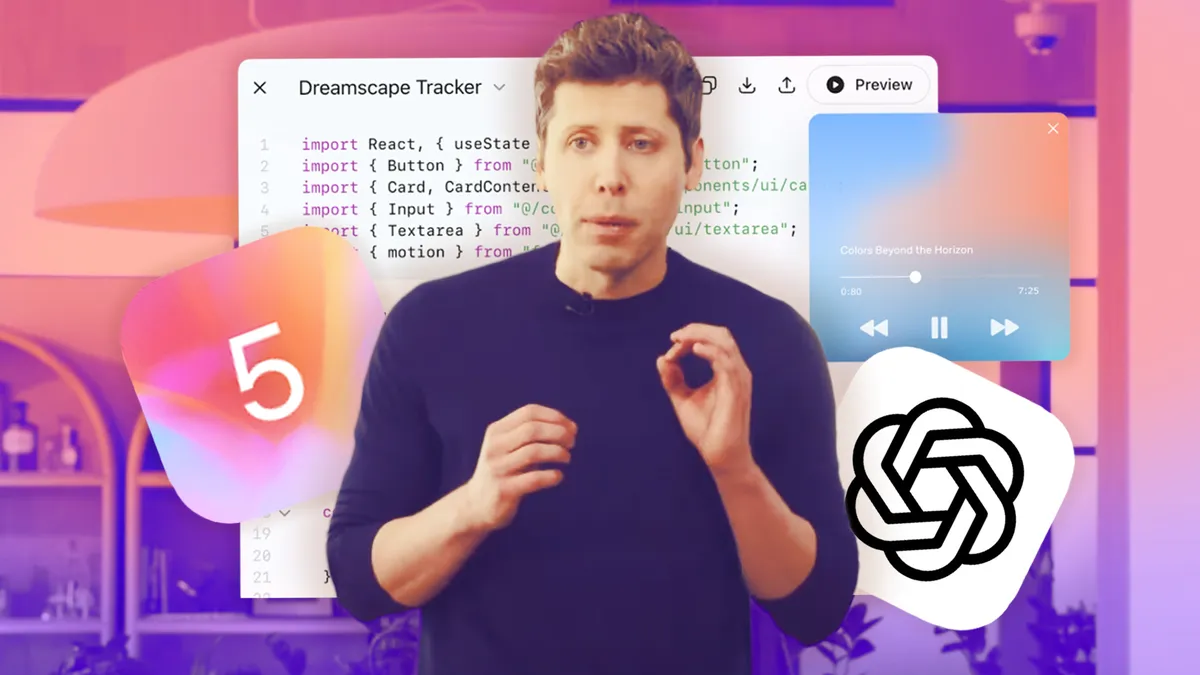

OpenAI has launched GPT‑5, a flagship system that blends fast responses with on‑demand “thinking,” a long context window, new developer controls, and pricing that undercuts prior frontier models. GPT‑5 becomes the default in ChatGPT for signed‑in users, while paid tiers unlock higher usage and a Pro variant with extended reasoning.

What actually shipped

OpenAI describes GPT‑5 as a unified system composed of:

- a high‑throughput main model for everyday queries,

- a deeper GPT‑5 Thinking model for hard tasks, and

- a real‑time router that decides which path to use based on task complexity and user intent (e.g., “think hard about this”). In ChatGPT, routing is automatic; in the API, developers get direct access to reasoning models and size variants.
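To make the routing idea concrete, here is a toy sketch of a two-path router. It is purely illustrative and is not OpenAI's router, whose signals and thresholds are not public. The hint phrases, the `complexity` score, the 0.7 threshold, and the path names `"main"` and `"thinking"` are all assumptions made up for this example.

```python
# Toy heuristic only: every name and threshold here is hypothetical.
THINK_HINTS = ("think hard", "think carefully", "step by step")


def route(prompt: str, complexity: float) -> str:
    """Pick a hypothetical path for a prompt.

    complexity is a caller-supplied score in [0, 1]; how a real system
    would estimate it is outside the scope of this sketch.
    """
    # Explicit user intent ("think hard about this") forces the deeper path.
    wants_thinking = any(hint in prompt.lower() for hint in THINK_HINTS)
    if wants_thinking or complexity >= 0.7:
        return "thinking"
    return "main"


if __name__ == "__main__":
    print(route("What is the capital of France?", 0.1))
    print(route("Think hard about this proof.", 0.1))
```

The point of the sketch is only that two signals are combined: stated user intent and an estimate of task difficulty.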

Model lineup, context, and price

For developers, OpenAI offers gpt‑5, gpt‑5‑mini, and gpt‑5‑nano (text & vision), with a total context length of 400K tokens (up to ~272K input + 128K output in API). Public pricing at launch: $1.25 / 1M input tokens and $10 / 1M output tokens for gpt‑5; mini and nano are cheaper. There’s also gpt‑5‑chat‑latest (non‑reasoning) for ChatGPT‑style use.
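As a rough way to reason about these numbers, the sketch below checks a request against the quoted context split and estimates its cost at the launch prices for gpt‑5. The function name and the hard limits are assumptions for illustration; the article gives the input limit only approximately (~272K), and current prices should be confirmed against OpenAI's pricing page.

```python
# Launch figures quoted in the article for gpt-5 (USD per 1M tokens).
PRICE_PER_M_INPUT = 1.25
PRICE_PER_M_OUTPUT = 10.00

# Approximate API limits from the article: ~272K input + 128K output.
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request, or raise if it cannot fit."""
    if input_tokens > MAX_INPUT_TOKENS:
        raise ValueError("input exceeds the assumed ~272K input limit")
    if output_tokens > MAX_OUTPUT_TOKENS:
        raise ValueError("output exceeds the assumed 128K output limit")
    input_cost = input_tokens / 1_000_000 * PRICE_PER_M_INPUT
    output_cost = output_tokens / 1_000_000 * PRICE_PER_M_OUTPUT
    return input_cost + output_cost


if __name__ == "__main__":
    # A long-document summary: 200K tokens in, 4K tokens out.
    print(f"${estimate_cost(200_000, 4_000):.2f}")  # prints "$0.29"
```

Even with a much lower per-token input price, a long prompt can still dominate the bill: here 200K input tokens cost $0.25 against $0.04 for 4K output tokens.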

In ChatGPT, GPT‑5 is available to all users, with Plus/Pro/Team gaining higher limits and access to GPT‑5 Thinking/Thinking Pro. Enterprise and Edu rollouts are staged.

Benchmarks and early claims

OpenAI highlights step‑ups across math, code, multimodal understanding, and health:

- AIME 2025 (no tools): 94.6%

- SWE‑bench Verified: 74.9%; Aider Polyglot: 88%

- MMMU: 84.2%

- HealthBench Hard: 46.2%

GPT‑5 Pro posts the top GPQA score within the family. OpenAI also reports sizable cuts in hallucinations versus 4o and o3.

Safety: from refusals to “safe‑completions”

Two notable shifts show up in the GPT‑5 System Card. First, OpenAI replaces blunt, hard refusals in many dual‑use domains with “safe‑completions,” which aim to provide high‑level, policy‑compliant guidance without enabling harm. Second, the team says deception went down materially in internal deception stress tests and red‑team studies when using the thinking models.

OpenAI classifies gpt‑5‑thinking as High capability in Bio/Chem under its Preparedness Framework and activates additional safeguards, even as it notes no definitive evidence the model clears the threshold for enabling severe biological harm.

Developer experience: agentic work, tool use, and control

On the platform side, OpenAI positions GPT‑5 as a coding and agentic workhorse:

- New `reasoning_effort` (including `minimal`) trades depth for speed; `verbosity` lets you dial how terse or exhaustive answers should be.

- Tool use is sturdier: parallel/sequenced calls, better recovery from tool errors, and preambles to narrate progress during long runs.

- Long‑context retrieval shows big gains on OpenAI’s MRCR and a new BrowseComp Long Context benchmark.
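The two controls named above can be pictured as ordinary request parameters. The sketch below only builds and validates a request payload; it makes no network call. The flat chat-style payload shape, the helper name `build_request`, and the allowed values other than `minimal` are assumptions not stated in the article, so check the API reference for the exact field names and nesting before relying on them.

```python
# Assumed value sets; the article itself only confirms "minimal".
ALLOWED_EFFORT = {"minimal", "low", "medium", "high"}
ALLOWED_VERBOSITY = {"low", "medium", "high"}


def build_request(prompt: str,
                  reasoning_effort: str = "medium",
                  verbosity: str = "medium",
                  model: str = "gpt-5") -> dict:
    """Build a hypothetical chat-style payload with the two new controls."""
    if reasoning_effort not in ALLOWED_EFFORT:
        raise ValueError(f"unknown reasoning_effort: {reasoning_effort!r}")
    if verbosity not in ALLOWED_VERBOSITY:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": reasoning_effort,  # depth vs. speed
        "verbosity": verbosity,                # terse vs. exhaustive
    }


if __name__ == "__main__":
    # A latency-sensitive call: little reasoning, short answer.
    print(build_request("Summarize this diff.", "minimal", "low"))
```

Validating these values client-side is a design choice for the sketch: it surfaces typos before a request is sent rather than relying on an API error.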

Integrations and platform reach

Microsoft is lighting up GPT‑5 across Copilot, Copilot Studio, and Microsoft 365 Copilot—with a visible “Try GPT‑5” entry point for Copilot users. This follows years of tight OpenAI‑Azure alignment.

Apple says its Apple Intelligence integration will move from GPT‑4o to GPT‑5 in the next major OS cycle (iOS/iPadOS/macOS), expanding consumer surface area beyond ChatGPT itself.

The week’s one‑two punch: open weights, then GPT‑5

GPT‑5 lands just days after OpenAI’s first open‑weight releases since GPT‑2: gpt‑oss‑120b and gpt‑oss‑20b (Apache‑2.0). The larger model targets parity with o4‑mini on core reasoning benchmarks, while the 20B variant aims at o3‑mini‑class performance and even edge deployment. AWS, among others, is already distributing them. This open‑weight move reframes OpenAI’s posture toward developer control and portability, and it sets the stage, strategically, for GPT‑5’s price/perf message.

Reception: powerful—but not (entirely) a revolution

Coverage has been notably mixed: many see GPT‑5 as a decisive upgrade for coding and reasoning at lower cost, but not the dramatic, across‑the‑board leap some expected. Financial press and reviewers characterized it as evolutionary, with some rival models still leading in select tests.

The rollout (and walk‑backs)

OpenAI’s first 48 hours weren’t all smooth. Users noticed routing quirks and the removal of familiar model picks; after pushback, OpenAI signaled tweaks and even brought 4o back as an option for some users. The company has also owned a “chart screwup” that set social media buzzing. Translation: fast shipping, fast iteration.

Economics and the coming price war

OpenAI’s aggressive pricing (especially input tokens) is already being read as a shot across the bow—pressuring peers to match on cost or deliver clearly superior capability/latency. Analysts warn a price war could compress margins as capex soars, making usage‑tier strategy and enterprise upsell critical.

What it means for teams and enterprises

Three practical takeaways for CIOs and heads of product:

- Agentic coding co‑pilots get significantly better: SWE‑bench and Aider Polyglot jumps matter in day‑to‑day bug‑fixing and repo navigation. Expect fewer stalls in tool chains and deeper autonomy on long‑running tasks.

- Safety posture improves, but governance doesn’t vanish: safe‑completions reduce brittle refusals, and deception goes down in controlled tests—yet model updates and routing introduce fresh change‑management needs (policy tests, prompt hygiene, and audit trails).

- Cost curves bend: with lower input pricing and long context, retrieval‑heavy and summarization workloads get cheaper. Still, reasoning tokens count as output, so monitor tool‑call and verbosity settings in production.
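The last point is easy to underestimate, so here is a small worked sketch of how hidden reasoning tokens change the output bill. It uses the $10 per 1M output tokens launch price quoted for gpt‑5; the function name and the token counts in the example are hypothetical.

```python
PRICE_PER_M_OUTPUT = 10.00  # USD per 1M output tokens, launch price for gpt-5


def output_cost(visible_tokens: int, reasoning_tokens: int) -> float:
    """Estimate output cost when reasoning tokens are billed as output."""
    billed_tokens = visible_tokens + reasoning_tokens
    return billed_tokens / 1_000_000 * PRICE_PER_M_OUTPUT


if __name__ == "__main__":
    # Hypothetical request: a 500-token answer preceded by 3,000 reasoning tokens.
    with_reasoning = output_cost(500, 3_000)
    answer_only = output_cost(500, 0)
    print(f"with reasoning: ${with_reasoning:.4f}")  # prints "with reasoning: $0.0350"
    print(f"answer only:    ${answer_only:.4f}")     # prints "answer only:    $0.0050"
```

In this made-up case the visible answer accounts for one seventh of the billed output, which is why effort and verbosity settings are worth tracking alongside token counts.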

Competitive landscape

Rivals won’t sit still. The FT frames GPT‑5 as strong but hardly uncontested; meanwhile, Reuters underscores the macro pressure: after two years of spending, Big Tech needs tangible ROI from AI. OpenAI’s bet is that “software on demand”—instant apps, UIs, agents—will be the sticky use case that justifies the infra.

Open questions to watch

- Router transparency: Will users and org admins get clearer signals about which sub‑model handled an answer (and why)?

- Safety vs. usability: Safe‑completions are promising; how consistently do they perform in red‑team exercises across niche domains?

- Vendor lock‑in vs. open weights: Does gpt‑oss meaningfully reduce platform risk, or does it mainly serve as a capability bridge and funnel to GPT‑5?

Bottom line

GPT‑5 is less a single model and more a routing‑plus‑reasoning architecture baked into ChatGPT and the API. It’s faster, more accurate in many settings, notably better at coding/agentic work, and priced to move. Even with a bumpy start and some tempered expectations, it’s a meaningful reset of the product + platform equation—and it arrives in tandem with open‑weight releases that broaden OpenAI’s posture from walled garden to a somewhat more permeable ecosystem.