Chinese AI startup Z.ai, formerly Zhipu AI, has intensified the global competition in generative AI with the launch of its most advanced large language model to date: GLM-4.5. Unveiled at the 2025 World AI Conference in Shanghai, the model is more than a performance leap; it is a deliberate geopolitical and technological statement. Positioned as China’s most powerful open-source model yet, GLM-4.5 combines a mixture-of-experts (MoE) architecture with a dual-mode reasoning system. It is purpose-built for agentic tasks such as autonomous coding, web browsing, and tool use. What sets it apart is not just its technical depth but its accessibility. It is fully open-source under the permissive MIT license and ships as both a flagship model and a lighter variant aimed at smaller deployments.

GLM-4.5 has 355 billion total parameters, of which only 32 billion are activated for each token during inference. Its MoE design favors depth over width, stacking more layers instead of widening each one. Activating only a small slice of the weights keeps per-token compute low, and Z.ai argues that the added depth improves reasoning. Its lighter sibling, GLM-4.5 Air, scales down to 106 billion total and 12 billion active parameters, which brings it within reach of workstation-class hardware. Even at 4-bit precision, however, the Air model’s weights occupy roughly 53 GB. That means 24 GB consumer cards such as Nvidia’s RTX 3090 or 4090 need a multi-GPU setup or CPU offloading. This two-tier release is clearly designed to broaden access across use cases, from academic researchers to startups deploying in production.
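The gap between total and active parameters has a practical consequence worth spelling out. Compute per token scales with the active parameters, but memory scales with the total, because every expert must stay loaded. The following is a rough back-of-envelope sketch using the article's parameter counts. It assumes memory is simply parameters times bytes per parameter. The precision table and function names are illustrative assumptions, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Rough weight-memory estimate for the two GLM-4.5 variants (illustrative).
# Assumption: memory = parameters x bytes per parameter. This ignores the
# KV cache, activations, and framework overhead, so real usage is higher.

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {
    # name: (total parameters, active parameters per token), per the article
    "GLM-4.5": (355e9, 32e9),
    "GLM-4.5-Air": (106e9, 12e9),
}


def weight_gb(params: float, precision: str) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def active_fraction(total: float, active: float) -> float:
    """Share of parameters that participate in computing one token."""
    return active / total


if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        print(f"{name}: {active_fraction(total, active):.1%} of weights active per token")
        for precision in BYTES_PER_PARAM:
            # All experts must be resident, so memory uses the TOTAL count.
            print(f"  {precision}: ~{weight_gb(total, precision):.0f} GB of weights")
```

By this estimate, GLM-4.5 activates about 9% of its weights per token and GLM-4.5 Air about 11%. At 4-bit, the Air model's weights alone come to roughly 53 GB, which is more than a single 24 GB card holds.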

What truly elevates GLM-4.5 is its hybrid interaction design. It supports two distinct modes: a fast, low-latency non-thinking mode for standard prompts, and a thinking mode for complex multi-step reasoning, tool use, and planning. Under the hood, the model relies on Grouped-Query Attention, the Muon optimizer, and QK-Norm for training stability. It also includes a Multi-Token Prediction (MTP) layer that enables speculative decoding at inference time. The result is a model capable of sustained context management, goal-driven reasoning, and responsive application generation, all critical capabilities for future AI agents.
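Speculative decoding, which the MTP layer is credited with enabling, can be illustrated in a few lines. The following is a minimal sketch of the generic greedy variant, not Z.ai's implementation. A cheap draft guesses several tokens ahead, and the full model keeps the longest prefix it agrees with plus its own correction at the first mismatch. Here both "models" are hypothetical toy functions that map a token list to the next token. The draft stands in for the MTP layer.

```python
from typing import Callable, List

# Toy stand-in for a language model: maps a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_generate(target: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Reference baseline: ordinary token-by-token greedy decoding."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(target(seq))
    return seq


def speculative_generate(target: NextToken, draft: NextToken,
                         prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: a cheap draft guesses up to k tokens and the
    target keeps the longest prefix it agrees with, plus its own correction."""
    seq = list(prompt)
    goal = len(prompt) + n_new
    while len(seq) < goal:
        # 1. Draft proposes a short continuation autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. Target checks each proposed position in order. Production systems
        #    score all positions in one batched forward pass; we loop for clarity.
        for guess in proposal:
            expected = target(seq)
            seq.append(expected)   # the target's token is always what is kept
            if expected != guess:  # first disagreement: drop the rest of the draft
                break
    return seq


if __name__ == "__main__":
    target = lambda s: (3 * s[-1] + 1) % 10
    draft = lambda s: 0 if s[-1] == 7 else (3 * s[-1] + 1) % 10  # imperfect guesser
    print(speculative_generate(target, draft, [2], 10, k=3))
```

Every kept token is the target's own greedy choice, so the output matches plain greedy decoding exactly. The speed-up in real systems comes from verifying the whole draft in a single forward pass. Because this sketch calls the target per position for readability, it shows the acceptance logic but not the speed-up.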

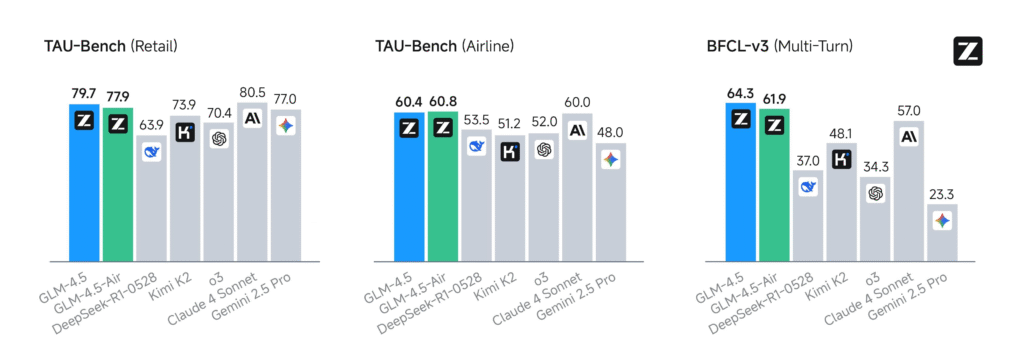

Benchmark results support its high-end billing. Across 12 evaluation sets spanning reasoning, coding, and agentic tasks, including MMLU Pro, AIME 2024, MATH500, and GPQA, Z.ai reports that GLM-4.5 ranks among the top three models overall. It scores 84.6% on MMLU Pro, 91% on AIME24, and 98% on MATH500. On the BrowseComp web-browsing benchmark it reaches 26.4% accuracy, well ahead of Claude 4 Opus (18.8%) and close to o4-mini-high (28.3%). In coding, it scores 64.2% on SWE-bench Verified and 37.5% on Terminal-Bench, placing it among the stronger models on real-world programming tasks. In multi-round, head-to-head agentic coding sessions, GLM-4.5 won 53.9% of matchups against Kimi K2 and 80.8% against Qwen3 Coder. It also posts a tool-calling success rate of 90.6%, which points to strong multi-function orchestration.

Yet beyond benchmarks, practical utility is GLM-4.5’s strongest asset. Through natural-language instructions alone, the model can generate complete web applications, HTML5 games such as Flappy Bird, complex Python simulations, SVG graphics, and Beamer presentations. Z.ai has integrated it with full-stack coding tools, published the weights on Hugging Face and ModelScope, and supports inference through the vLLM framework. In effect, it serves not just as a model but as a modular AI development platform.

Its efficiency is equally competitive. It offers a 128k-token context window, generates about 76 tokens per second, and begins responding in about 0.68 seconds on typical prompts. At a blended price of just $0.96 per million tokens, GLM-4.5 undercuts nearly all major U.S. rivals, including proprietary offerings from Anthropic and OpenAI. Self-hosting costs can be lower still, depending on quantization and hardware.
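The quoted speed and price figures translate directly into workload estimates. The following is a back-of-envelope sketch under two simplifying assumptions. First, the $0.96 blended rate applies uniformly to every token, although real price lists usually charge input and output tokens differently. Second, response time is modeled as a fixed delay before the first token plus steady streaming at 76 tokens per second. The constant and function names are illustrative.

```python
# Back-of-envelope cost and latency from the article's quoted figures.
# Assumptions: one blended price for all tokens, and latency modeled as a
# fixed delay before output begins plus constant-rate streaming.

PRICE_PER_MILLION_USD = 0.96  # blended price per 1M tokens
FIRST_TOKEN_S = 0.68          # quoted delay before output begins
TOKENS_PER_S = 76.0           # quoted output speed


def cost_usd(total_tokens: int) -> float:
    """Approximate spend for a given number of tokens at the blended rate."""
    return total_tokens / 1_000_000 * PRICE_PER_MILLION_USD


def response_time_s(output_tokens: int) -> float:
    """Approximate wall-clock time to stream a response of this length."""
    return FIRST_TOKEN_S + output_tokens / TOKENS_PER_S


if __name__ == "__main__":
    print(f"50M tokens: ${cost_usd(50_000_000):.2f}")
    print(f"1,000-token answer: {response_time_s(1000):.1f} s")
```

Under these assumptions, a 1,000-token answer takes about 0.68 + 1000/76 ≈ 13.8 seconds, and 50 million tokens cost about $48. Actual throughput varies with load, prompt length, and provider.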

GLM-4.5 is also underpinned by “slime,” Z.ai’s own reinforcement learning framework, which is designed to train agentic models at scale across diverse environments. Z.ai credits it with the model’s long-horizon reasoning, and it could open the door to continual skill adaptation. Z.ai’s open-source approach aligns with broader national efforts in China to develop sovereign AI capabilities independent of U.S. cloud infrastructure and licensing restrictions. The release is part of a wider surge in open models from Chinese developers, including DeepSeek, Alibaba’s Qwen team, and Moonshot AI. Each is leveraging open architectures to compete on scale, usability, and domain specialization.

In contrast to recent moves by Meta and OpenAI to tighten model access and API control, Z.ai is pushing aggressively in the opposite direction: maximizing openness and speed of adoption. The release of full weights, inference code, and documentation invites widespread deployment, modification, and collaboration, both at home and abroad. This strategy has major implications. It gives China a strategic buffer against regulatory pressures, promotes talent development across the nation’s universities and research centers, and cultivates an open ecosystem where innovation can be decentralized and fast-moving.

In a global context, GLM-4.5 sends an unmistakable message: high-performance agentic AI does not have to be proprietary. By delivering cutting-edge performance through an accessible platform, Z.ai has challenged the prevailing market logic of the West’s AI giants. It has also helped democratize an entire class of intelligent systems. As LLMs become the core substrate of future productivity, creative work, and scientific exploration, GLM-4.5’s arrival marks a pivotal moment where cost, openness, and performance begin to align in new ways. In that alignment, the future of AI may look less like today’s gated enterprise APIs and more like a globally accessible innovation commons.